VicomTTS: automatic text-to-speech based on neural networks

VicomTTS is an advanced neural network-based synthesis engine designed for text-to-speech generation in Basque. The platform currently offers three Basque voices (Nerea, Miren and Jon) as well as voices in Spanish (Mikel), French (Garazi) and English (Anne).

Tactical objectives

This solution is a digital service that promotes the use of Basque, a minority language. To achieve this, we have developed a versatile service, accessible through REST API calls. The service was developed in Python, using PyTorch for efficient execution of the synthesis models.

Methods

Citizens can access the solution through a web app, a mobile app or an extension without having to supply any additional data. Government departments that require direct API access are given a unique API key for identification purposes. The service is continuously monitored and regularly tested using Datadog. In addition, Istio can be used to deploy new versions of the service using mirroring or canary strategies.
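As an illustration only, a client request to a service of this kind could be sketched as follows. The voice names come from this document; the endpoint URL, the `X-API-Key` header name and the JSON field names are assumptions made for the example, since the actual API contract is not described here. The sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Voice names are taken from the text; the values are ISO 639-1 language codes.
VOICES = {
    "Nerea": "eu", "Miren": "eu", "Jon": "eu",
    "Mikel": "es", "Garazi": "fr", "Anne": "en",
}

# Hypothetical endpoint: the real service URL is not given in this document.
TTS_URL = "https://tts.example.invalid/synthesize"


def build_tts_request(text, voice, api_key=None):
    """Build (but do not send) a POST request asking for synthesized speech."""
    if voice not in VOICES:
        raise ValueError(f"unknown voice: {voice}")
    # Assumed payload shape; the field names are illustrative.
    payload = {"text": text, "voice": voice, "language": VOICES[voice]}
    headers = {"Content-Type": "application/json"}
    if api_key is not None:
        # Assumed header name for the key issued to government departments.
        headers["X-API-Key"] = api_key
    return urllib.request.Request(
        TTS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


request = build_tts_request("Kaixo, mundua!", "Nerea", api_key="demo-key")
```

Against a real deployment, the request would be sent with `urllib.request.urlopen(request)` and the audio read from the response body. Citizen-facing clients would omit the key.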

Functional requirements

It took a few months to go from initial testing to deployment in a production environment. The service started with two voices: Nerea in Basque and Mikel in Spanish. Subsequently, significant improvements were made, such as the integration of Istio and architectural enhancements to improve performance. Reviews and training updates are carried out annually to maintain and improve voice quality and realism. Two additional Basque voices, Miren and Jon, were added to provide greater variety. This involved professional studio recording and innovative voice creation techniques.

The development and improvement process involved working with Vicomtech, an artificial intelligence company, as the Basque Government's IT department lacked the expertise to create such models. The project involved a team of six people, including a project manager, a developer, an AI specialist, a DevOps specialist, a systems analyst and a QA specialist.

Results and impact

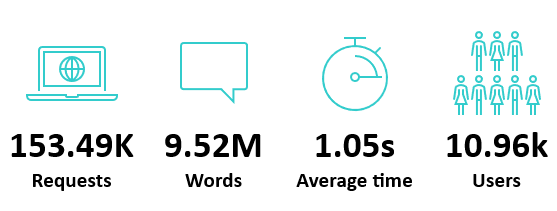

Although service usage has not met initial expectations, daily usage is increasing steadily and the service is gradually gaining popularity. We currently process an average of 5000 requests per day. According to our statistics, 50% of requests are answered in less than 1 second, and the service has a success rate of 99.7%.
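To make these figures concrete, the following minimal sketch shows how such indicators could be derived from per-request records. The `RequestLog` structure and the sample values are hypothetical; the document does not describe how the statistics are actually collected.

```python
from dataclasses import dataclass


@dataclass
class RequestLog:
    """Hypothetical per-request record: latency in seconds and outcome."""
    latency_s: float
    ok: bool


def summarize(logs, threshold_s=1.0):
    """Return the share of requests faster than the threshold and the success rate."""
    total = len(logs)
    if total == 0:
        raise ValueError("no requests to summarize")
    fast = sum(1 for r in logs if r.latency_s < threshold_s)
    succeeded = sum(1 for r in logs if r.ok)
    return {
        "total": total,
        "share_under_threshold": fast / total,
        "success_rate": succeeded / total,
    }


def expected_daily_failures(requests_per_day, success_rate):
    """Average number of failed requests per day implied by a success rate."""
    return requests_per_day * (1 - success_rate)


# Made-up sample data, for illustration only.
sample = [
    RequestLog(0.4, True),
    RequestLog(0.9, True),
    RequestLog(1.5, True),
    RequestLog(2.0, False),
]
summary = summarize(sample)
failures = expected_daily_failures(5000, 0.997)
```

With the figures reported above, 5000 requests per day at a 99.7% success rate correspond to roughly 15 failed requests per day.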

Dependencies and constraints

To address infrastructure challenges, we used Red Hat OpenShift as the Kubernetes platform to ensure scalability and self-healing capabilities. In addition, graphics cards (GPUs) have been installed in the Basque Government's Data Processing Centre (DPC) to support the deployment of the artificial intelligence models created. The integration of NVIDIA software (CUDA drivers), VMware virtual machines with vGPU support and the development of AI models using PyTorch were also key components of the solution.